2009 November 11

HVM Xen Architecture

- Xen Hardware Virtual Machine Guests

Until virtualization support was introduced in recent hardware, the only ways to run several operating systems concurrently on the same physical machine were techniques such as paravirtualization or binary rewriting. Both require a deep understanding of the architecture of the virtualized guest system, and paravirtualized (PV) guests additionally need changes to the original source code, which means the operating system kernel has to be rebuilt. Xen also supports guest domains running unmodified operating systems, such as closed-source Windows systems, by using the virtualization extensions available on recent processors: Intel Virtualization Technology (Intel VT) or the AMD extension (AMD-V).

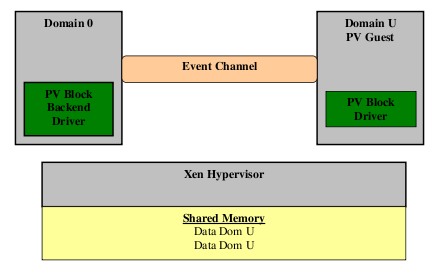

The main difference between a paravirtualized guest and an HVM guest is the way they access hardware resources, especially I/O devices such as disks. A PV guest's paravirtualized kernel makes hypercalls (in a fashion very similar to an ordinary system call). These are handled by the Xen hypervisor in ring 0, which forwards the requests to the real device drivers in the Linux Dom0 kernel, and those drivers issue the actual I/O requests to the hardware. A higher-level abstraction of this mechanism is implemented as event channels and shared memory buffers between the PV guest and Dom0, which carry requests and data. The shared memory services are offered by the hypervisor, which manages the whole memory layout of the machine, including the mappings from guest physical/virtual addresses to true physical addresses through the management of the TLB entries in the MMU.

Paravirtualized Guest Architecture
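
The event-channel and shared-memory path described for PV guests can be modelled in a few lines. This is only a toy sketch, not Xen's actual ring protocol: the class names (`SharedRing`, `EventChannel`, `Frontend`, `Backend`) and the request tuples are made up for illustration, and the "disk" is a plain dictionary standing in for the real driver in Dom0.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class SharedRing:
    """Stand-in for a memory page shared between a PV guest and Dom0."""
    requests: deque = field(default_factory=deque)
    responses: deque = field(default_factory=deque)


class EventChannel:
    """Stand-in for an event channel: a notification that carries no data."""

    def __init__(self):
        self.handler = None

    def bind(self, handler):
        self.handler = handler

    def notify(self):
        # In Xen the notification is delivered through the hypervisor.
        if self.handler is not None:
            self.handler()


class Backend:
    """Dom0 side: owns the real driver (here, a dict acting as a disk)."""

    def __init__(self, ring, channel):
        self.ring = ring
        self.disk = {}
        channel.bind(self.on_event)

    def on_event(self):
        # Drain every pending request and queue one response per request.
        while self.ring.requests:
            op, sector, data = self.ring.requests.popleft()
            if op == "write":
                self.disk[sector] = data
                self.ring.responses.append(("ok", None))
            elif op == "read":
                self.ring.responses.append(("ok", self.disk.get(sector)))
            else:
                self.ring.responses.append(("error", None))


class Frontend:
    """PV guest side: places a request on the ring, then signals Dom0."""

    def __init__(self, ring, channel):
        self.ring = ring
        self.channel = channel

    def submit(self, op, sector, data=None):
        self.ring.requests.append((op, sector, data))
        self.channel.notify()
        return self.ring.responses.popleft()


ring = SharedRing()
channel = EventChannel()
backend = Backend(ring, channel)
frontend = Frontend(ring, channel)

print(frontend.submit("write", 7, b"hello"))
print(frontend.submit("read", 7))
```

The point of the split is that the data travels through shared memory while the event channel only says "look at the ring", so the guest never touches the hardware itself.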

With fully virtualized guests things get trickier. Keep in mind that on the x86 and ia64 architectures Xen configures all exceptions and external interrupts to go to the hypervisor software in ring 0. One of the basic tasks of a minimalist hypervisor is to isolate the guests from each other by keeping their memory layouts separate, preventing unauthorized access between virtual machines much as a normal operating system does with userspace processes. Another is to emulate the instructions that the virtualized guest, running at an unprivileged level, does not have access to. For example, a guest operating system can no longer use a hypercall to set up a required TLB mapping, as paravirtualized guests usually do; instead it has to rely on the hypervisor to emulate its TLB mapping instruction.

Suppose a Windows Vista process accesses an unmapped address. Normally this page miss traps into the Windows NT kernel, whose MMU module for the running architecture walks the PTEs for that process and uses a ring 0 instruction to add a TLB entry from guest virtual (the userspace address) to the guest physical address that the Windows kernel knows about, after first copying that memory from swap into physical memory if needed. But now the Windows kernel is running in the mode entered through a VM entry (Intel's terminology), and the virtual CPU that the hardware presents does not have access to the TLB mapping instruction. Executing it causes a VM exit, which is handled by the hypervisor software. The hypervisor emulates the instruction by looking in its own page tables for the current guest (virtual machine) and adding a TLB entry from the original guest userspace virtual address to the true machine physical address.

There is a catch, though: this is not the normal case, because the page-miss exception does not go directly to the Vista kernel. Instead it goes to the hypervisor software, which can add the correct TLB entry transparently if it already knows the translation, or can inject a page-miss exception into the Windows kernel in VM space by jumping to the Windows page-miss handler and switching the current context to that of the virtual machine.
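
The two outcomes of a page miss (fill the TLB transparently, or inject the miss into the guest) can be sketched as a small model. Everything here is an assumption made for illustration: the names `GuestKernel`, `Hypervisor`, `guest_to_machine` and the page-granular addresses are hypothetical, and the dictionaries stand in for real page tables and TLB hardware.

```python
class GuestKernel:
    """Guest OS view: maps guest-virtual pages to guest-physical pages."""

    def __init__(self, free_frames):
        self.page_table = {}
        self.free_frames = list(free_frames)
        self.injected_misses = 0

    def page_miss_handler(self, gva):
        # Runs only when the hypervisor injects the miss into the guest.
        self.injected_misses += 1
        gpa = self.free_frames.pop(0)   # e.g. after paging in from swap
        self.page_table[gva] = gpa
        return gpa


class Hypervisor:
    """Receives every page miss first and owns the real translations."""

    def __init__(self, guest, guest_to_machine):
        self.guest = guest
        self.guest_to_machine = guest_to_machine  # guest physical -> machine
        self.tlb = {}                             # guest virtual -> machine

    def on_page_miss(self, gva):
        gpa = self.guest.page_table.get(gva)
        if gpa is None:
            # Translation unknown: hand the miss to the guest's own handler.
            gpa = self.guest.page_miss_handler(gva)
        # Translation known: install the entry without involving the guest.
        mpa = self.guest_to_machine[gpa]
        self.tlb[gva] = mpa
        return mpa

    def access(self, gva):
        if gva in self.tlb:
            return self.tlb[gva]
        return self.on_page_miss(gva)


guest = GuestKernel(free_frames=[0x1000])
guest.page_table[0x500000] = 0x2000   # a mapping the guest set up earlier
hv = Hypervisor(guest, {0x1000: 0x7A000, 0x2000: 0x3C000})

print(hex(hv.access(0x500000)), guest.injected_misses)  # transparent fill
print(hex(hv.access(0x400000)), guest.injected_misses)  # injected into guest
```

In the first access the guest never notices the miss; in the second the guest's handler runs once, and later accesses to the same page hit the TLB directly.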

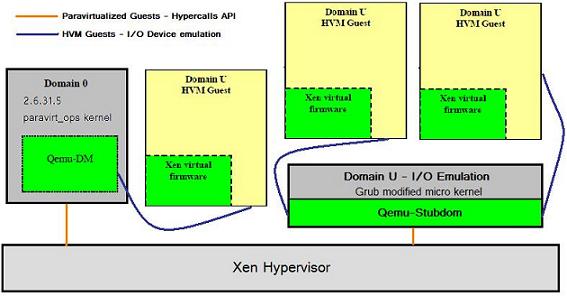

The same kind of emulation must be done for some I/O devices. Because some hardware devices must be shared by all the virtual machines running on the platform, access to the actual I/O space must be multiplexed. This requires one central place where all requests are received, and in Xen's case that place is the Dom0 Linux. As before, an HVM guest instruction that accesses this I/O space, such as PCI addresses and ports, must be caught by the hypervisor software and emulated.

So that the hypervisor and the guest operating system share a common view of the virtual machine's interrupt layout, device layout and I/O addresses, a virtual firmware is added to the HVM guest at boot time by copying a custom BIOS into the guest's physical address space. The guest firmware (BIOS) provides the boot services and run-time services required by the OS in the HVM. This guest firmware does not see any real physical devices; it operates on the virtual devices provided by the device models. You can find the source code for the default firmware used by Xen in the xen-3.4.1/tools/firmware/hvmloader directory. As the source code shows, things like the PCI bus, ACPI and the virtual APIC are set up by the firmware BIOS code using paravirtualized code (Xen hypercalls). In this way the hypervisor knows the addresses where the Windows kernel has installed its interrupt handlers, the guest physical addresses where the operating system has set up the emulated devices, and so on. The hypervisor can then restrict the guest from accessing these addresses (in guest physical space), so that it can trap each access and emulate the instruction using the virtual drivers it provides.

So if, for example, the Windows kernel block module wants to write some data to a physical drive, it issues a DMA operation by writing to the PCI address where it has mapped that device, as read from the BIOS. The hypervisor traps this access and decodes the I/O request that the guest has issued. When it comes to the actual emulation, implementations follow different paths. VMware's solution is to keep the device emulation inside the hypervisor software for performance reasons. Xen's solution is to use the QEMU emulator, specially compiled for this purpose, since one job of a userspace emulator is precisely to handle I/O requests from the target by decoding the guest instruction that trapped into the emulator code.

Hardware Virtual Machine Guest Architecture
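
The trap-and-emulate path for device access can be sketched in the same spirit. This is a toy model under stated assumptions, not Xen or QEMU code: `TrappingHypervisor`, `EmulatedDisk`, the register offsets and the base address `0xF0000000` are all hypothetical, and a real device model decodes far richer requests.

```python
class EmulatedDisk:
    """Hypothetical device model: a sector-select register and a data register."""

    SECTOR_REG = 0x0
    DATA_REG = 0x4

    def __init__(self):
        self.sectors = {}
        self.current = 0

    def write(self, offset, value):
        if offset == self.SECTOR_REG:
            self.current = value
        elif offset == self.DATA_REG:
            self.sectors[self.current] = value

    def read(self, offset):
        if offset == self.SECTOR_REG:
            return self.current
        if offset == self.DATA_REG:
            return self.sectors.get(self.current, 0)
        return 0


class TrappingHypervisor:
    """Routes guest-physical accesses either to RAM or to a device model."""

    def __init__(self):
        self.ram = {}
        self.regions = []   # (base, size, device) ranges the guest may not touch

    def register_device(self, base, size, device):
        self.regions.append((base, size, device))

    def _find(self, gpa):
        for base, size, device in self.regions:
            if base <= gpa < base + size:
                return device, gpa - base
        return None, None

    def guest_write(self, gpa, value):
        device, offset = self._find(gpa)
        if device is None:
            self.ram[gpa] = value        # ordinary memory: no trap needed
            return "ram"
        device.write(offset, value)      # trapped: forwarded to the device model
        return "emulated"

    def guest_read(self, gpa):
        device, offset = self._find(gpa)
        if device is None:
            return self.ram.get(gpa, 0)
        return device.read(offset)


hv = TrappingHypervisor()
disk = EmulatedDisk()
hv.register_device(0xF0000000, 0x8, disk)

hv.guest_write(0x00100000, 42)      # plain memory write
hv.guest_write(0xF0000000, 3)       # select sector 3 on the emulated disk
hv.guest_write(0xF0000004, 0xAB)    # write data to that sector
print(hv.guest_read(0xF0000004), disk.sectors)
```

In Xen the `EmulatedDisk` role is played by QEMU outside the hypervisor, while the design attributed to VMware above would keep it inside the hypervisor itself.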

One thing to note is that QEMU already had code for emulating basic I/O hardware: IDE hard drives, several network cards (PCI or ISA cards on the PC target), a PCI UHCI USB controller for guests using USB devices, a VESA video card for graphics and much more. It did this by first setting up a custom BIOS in the guest physical image/address space, in order to trick the guest into installing and using drivers for the emulated hardware that QEMU knows about, just as it would when booting on that specific machine. So what needed to be done for QEMU to work with Xen was to add support for delivering interrupts from the real devices, by way of the hypervisor, to QEMU (whether it runs as a process or in a custom I/O guest domain), and to let QEMU use the actual backend drivers in Dom0 or in a DomU, depending on its compile options.

You can see the two new custom targets added by the Xen development team in xen-3.4.1/tools/ioemu-qemu-xen/i386-dm, for the QEMU port that runs as a Dom0 process emulating the I/O for a single HVM guest domain, and in xen-3.4.1/tools/ioemu-qemu-xen/i386-stubdom, for the port that runs in a custom I/O guest domain that can be used to emulate more than one HVM guest. Both ports use paravirtualized code to talk to Xen through hypercalls. You may also want to look at the xen-3.4.1/stubdom directory to see how the custom guest I/O domain is set up, including a custom Grub image for booting just QEMU in a micro kernel environment. Unfortunately the HVM machine will only have the basic hardware emulated by QEMU, like the Cirrus Logic GD5446 video card or the VESA VBE virtual graphics card (option ‘-std-vga’) for higher resolutions, so no games on my HVM guest, at least not yet :).
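
For reference, these pieces come together in the guest configuration file. Xen 3.x `xm` configuration files use Python syntax, so a minimal HVM guest definition might look like the sketch below. Treat the values as assumptions: the guest name, disk image path and bridge name are hypothetical, and the hvmloader and qemu-dm paths are the ones used by the example configuration shipped with Xen, which may differ on your installation.

```python
# Sketch of an xm HVM guest configuration (Python syntax).
# All paths and names below are assumptions; adjust them to your system.

builder = "hvm"                               # fully virtualized guest
kernel = "/usr/lib/xen/boot/hvmloader"        # the guest firmware loader
device_model = "/usr/lib/xen/bin/qemu-dm"     # QEMU running as a Dom0 process

name = "hvm-example"                          # hypothetical guest name
memory = 512                                  # guest RAM in MB

# Emulated IDE disk backed by a (hypothetical) image file in Dom0.
disk = ["file:/var/images/hvm-example.img,hda,w"]

# Emulated network card attached to a (hypothetical) bridge.
vif = ["type=ioemu, bridge=xenbr0"]

boot = "c"                                    # boot from the first hard disk
vnc = 1                                       # expose the emulated display over VNC
stdvga = 1                                    # VESA VBE card instead of the Cirrus Logic one
```

Setting `stdvga = 0` selects the default Cirrus Logic card instead.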